Image Moderation AI is on trend in today’s digital world. Approximately 3.2 billion images are shared daily across the internet, according to The Conversation and Light Stalking. This includes images shared on dating platforms, on social media and messaging platforms like WhatsApp, Snapchat, Facebook, and Instagram, and those uploaded to online storage services. At this volume, maintaining a safe and respectful environment has become a growing challenge. This is where Image Moderation AI steps in as a game-changer.

Whether you’re building a dating site, a social media app, or a user-generated content platform, AI-powered image moderation is a must.

What is Image Moderation AI?

Image Moderation AI refers to artificial intelligence systems designed to automatically analyze and filter images based on predefined rules or machine learning models. These tools can detect explicit content, violence, hate symbols, spam, and more, without human intervention.

Unlike manual moderation, which is slow and labor-intensive, AI-based moderation provides real-time results and scales efficiently as content volumes increase.

Here are the main types of businesses that benefit from AI moderation:

- Dating Platforms: Users upload profile photos, bios, and messages. AI moderation helps block nudity, fake profiles, and offensive content, for example by automatically filtering explicit profile pictures or sexually suggestive bios.

- Social Media Networks: Enormous volumes of images, videos, and comments are uploaded constantly, so manual moderation does not scale. AI moderation can detect hate speech, violent images, or harassment in comments.

- E-commerce Marketplaces: Sellers may upload counterfeit or prohibited items, misleading product photos, or offensive content. Here, AI can block listings for illegal goods or adult products not allowed by site policy.

- Gaming Platforms: Player-generated avatars, chat, and media may include offensive or explicit content. Image Moderation AI can automatically detect explicit usernames, profile pictures, or chat messages.

- Virtual Events & Webinars: Submitted ad creatives must comply with legal, moral, and brand safety standards. Image Moderation AI helps detect misleading images, adult content, or banned categories.

- Any platform subject to legal or community standards: If your business needs to comply with app store content rules, GDPR, or local regulations, or to protect brand safety and user trust, AI moderation is a smart investment.

Why Do Businesses Need Image Moderation AI?

- User Safety

Platforms that fail to remove inappropriate or harmful images risk exposing users, especially children, to disturbing content. AI moderation ensures instant protection.

- Brand Reputation

Offensive or graphic content can damage your brand’s credibility. Automated image screening helps maintain a clean and professional user experience.

- Regulatory Compliance

Governments and app stores are enforcing stricter content policies. Utilizing AI moderation enables you to stay compliant with content guidelines and avoid penalties.

- Scalability

Whether you’re a small app or a global platform, Image Moderation AI can handle millions of image submissions without added human costs.

What Can Image Moderation AI Detect?

Modern AI moderation tools are highly advanced and can be trained to accurately identify a wide range of harmful or inappropriate content. This includes nudity and adult content, such as explicit images or suggestive poses that violate platform guidelines. They also detect violence and gore, including graphic injuries, blood, or disturbing scenes that could be traumatic for viewers. In addition, AI can recognize hate symbols or offensive gestures, like swastikas, racist imagery, or gang signs, ensuring your platform remains respectful and inclusive. Lastly, AI systems are effective at filtering out spam images, including promotional graphics, fake profiles, clickbait content, and deceptive visuals meant to manipulate users. These capabilities enable businesses to automate content moderation at scale while maintaining a safe and welcoming user environment.

Advanced models even provide confidence scores, allowing you to decide when to auto-reject, review manually, or flag content for further inspection.
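As a rough illustration of how confidence scores can drive those three outcomes, here is a minimal Python sketch. The category names, the `route` function, and both thresholds are hypothetical; real services use their own score scales and labels, and the cutoffs would need tuning against your own data.

```python
from enum import Enum
from typing import Mapping


class Action(Enum):
    APPROVE = "approve"
    REVIEW = "manual_review"
    REJECT = "auto_reject"


# Hypothetical thresholds on a 0-1 scale; tune them on your own labeled data.
REJECT_AT = 0.90
REVIEW_AT = 0.50


def route(scores: Mapping[str, float]) -> Action:
    """Pick an action from per-category confidence scores in [0, 1]."""
    # The most confident harmful category decides the outcome.
    worst = max(scores.values(), default=0.0)
    if worst >= REJECT_AT:
        return Action.REJECT
    if worst >= REVIEW_AT:
        return Action.REVIEW
    return Action.APPROVE
```

With these example thresholds, `route({"nudity": 0.97})` is rejected automatically, `route({"violence": 0.62})` goes to a human reviewer, and `route({"nudity": 0.03})` is approved.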

Choosing the Right Image Moderation AI

When selecting an AI image moderation solution, consider the following:

- Accuracy: Does it reduce false positives and false negatives?

- Speed: Can it provide real-time moderation?

- Customization: Can you define your own rules or train it for your niche?

- Privacy: Does it comply with GDPR and data protection standards?
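One way to make the accuracy question concrete is to measure false positives and false negatives on a small set of images you have labeled yourself. The sketch below is only an illustration: the `error_rates` helper and the sample scores are made up, and it assumes the tool returns a single risk score between 0 and 1 per image.

```python
def error_rates(samples, threshold):
    """Return (false_positive_rate, false_negative_rate).

    samples: list of (score, is_harmful) pairs, where score is the
    model's risk score and is_harmful is your own ground-truth label.
    An image is flagged when its score is at or above the threshold.
    """
    false_pos = sum(1 for s, harmful in samples if s >= threshold and not harmful)
    false_neg = sum(1 for s, harmful in samples if s < threshold and harmful)
    safe_total = sum(1 for _, harmful in samples if not harmful)
    harmful_total = len(samples) - safe_total
    fpr = false_pos / safe_total if safe_total else 0.0
    fnr = false_neg / harmful_total if harmful_total else 0.0
    return fpr, fnr


# Made-up evaluation set: (risk score, is the image actually harmful?)
SAMPLES = [
    (0.95, True), (0.80, True), (0.60, True), (0.40, True),
    (0.70, False), (0.20, False), (0.10, False), (0.05, False),
]

for t in (0.30, 0.50, 0.75):
    fpr, fnr = error_rates(SAMPLES, t)
    print(f"threshold={t:.2f}  false positives={fpr:.0%}  false negatives={fnr:.0%}")
```

Raising the threshold trades false positives for false negatives, so comparing vendors or libraries at a single default threshold can be misleading.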

Need help implementing Image Moderation AI on your site?

Setting up Image Moderation AI may sound simple, but in reality it is a complex process that often requires expert guidance, especially if you want it to work accurately and efficiently within your existing system.

You’ll need to:

- Choose the right moderation model (pre-trained or custom)

- Ensure compatibility with your current tech stack

- Handle privacy, data storage, and compliance issues

- Decide between real-time vs. batch processing

- Manage performance and false-positive tuning
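To show what the real-time vs. batch decision can look like in code, here is a simplified Python sketch. Everything in it is hypothetical: `score_image` is a stand-in for whichever model or API you choose, and the 0.9 threshold is arbitrary.

```python
from collections import deque


def score_image(image_id: str) -> float:
    """Hypothetical stand-in for a model or API call; returns a risk score in [0, 1]."""
    return 0.99 if "bad" in image_id else 0.01


def moderate_now(image_id: str, threshold: float = 0.9) -> bool:
    """Real-time: the upload waits for the check. True means it is safe to publish."""
    return score_image(image_id) < threshold


class BatchModerator:
    """Batch: publish first, queue the image, and scan the queue later."""

    def __init__(self, threshold: float = 0.9):
        self.pending = deque()
        self.threshold = threshold

    def submit(self, image_id: str) -> None:
        self.pending.append(image_id)

    def run(self) -> list:
        """Score everything queued so far and return the IDs to take down."""
        flagged = []
        while self.pending:
            image_id = self.pending.popleft()
            if score_image(image_id) >= self.threshold:
                flagged.append(image_id)
        return flagged
```

Real-time checks keep harmful images from ever appearing but add latency to every upload; batch processing keeps uploads fast at the cost of a window in which unchecked content is visible.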

For many businesses, integrating AI moderation into a live platform without affecting user experience can be challenging. That’s where having the right strategy and support makes all the difference.

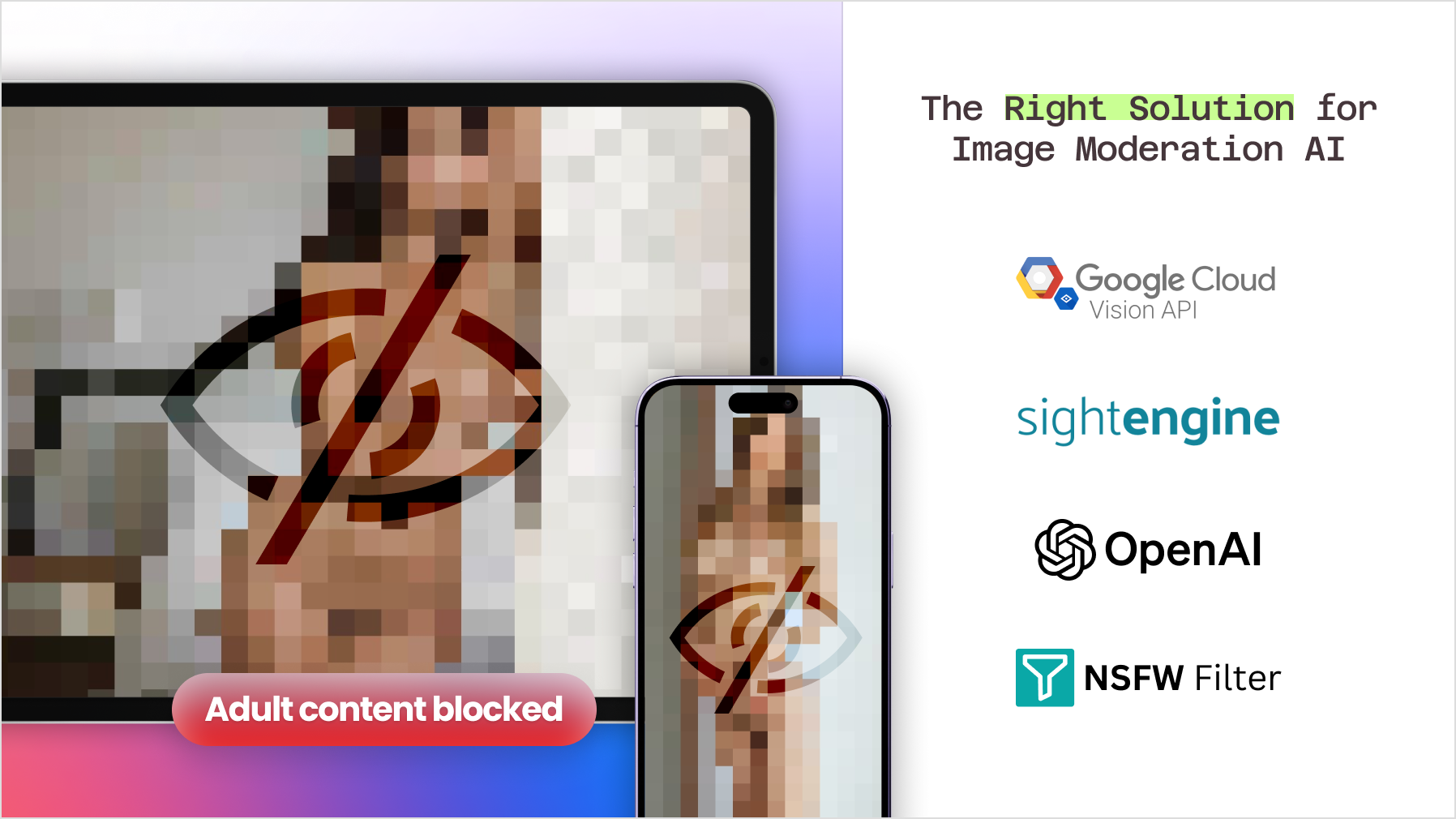

When it comes to implementation, you have two main options for your Image Moderation AI solution:

- Third-party AI services like Amazon Rekognition, Google Cloud Vision, or Microsoft Azure Content Moderator

- Built-in open-source libraries like OpenCV, TensorFlow, or custom Python models

Each approach has its strengths, and the right one depends on your goals, scale, budget, and technical resources.

Third-Party AI Services

Benefits:

- No Maintenance Required: These services handle model updates and improvements for you.

- High Accuracy and Up-to-Date Models: Providers like Google Cloud Vision, Amazon Rekognition, and Sightengine utilize constantly evolving models trained on massive datasets.

- Low Server Load: Image processing is done off-site, which reduces the load on your own infrastructure.

Top Providers:

- Google Cloud Vision API

- Amazon Rekognition

- Microsoft Azure Content Moderator

- Sightengine (Recommended for best moderation API performance)

- Imagga

Things to Consider:

- Ongoing API usage costs.

- Images are sent to external servers, which may raise concerns about privacy.
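For a sense of what the third-party route involves, the sketch below calls Amazon Rekognition's `detect_moderation_labels` operation through the `boto3` SDK. It assumes `boto3` is installed and AWS credentials are configured; the helper names and the 60% confidence cutoff are illustrative choices, not provider recommendations.

```python
def parse_moderation_labels(response, min_confidence=60.0):
    """Map label name -> confidence (0-100) from a DetectModerationLabels-style response."""
    return {
        label["Name"]: label["Confidence"]
        for label in response.get("ModerationLabels", [])
        if label["Confidence"] >= min_confidence
    }


def moderate_with_rekognition(image_bytes, min_confidence=60.0):
    """Send raw image bytes to Rekognition and return the labels it flags."""
    # Imported here so the parsing helper above works without the AWS SDK.
    import boto3

    client = boto3.client("rekognition")
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    return parse_moderation_labels(response, min_confidence)


# Made-up response in the shape the API returns, for trying the parser offline.
SAMPLE_RESPONSE = {
    "ModerationLabels": [
        {"Name": "Explicit Nudity", "Confidence": 97.2, "ParentName": ""},
        {"Name": "Violence", "Confidence": 41.5, "ParentName": ""},
    ]
}

print(parse_moderation_labels(SAMPLE_RESPONSE))
```

Note that every call sends the image to the provider and is billed per request, which is where the cost and privacy considerations above come from.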

Built-In Scripts and Libraries

Benefits:

- No API or Subscription Costs: Once set up, these tools are free to use.

- Greater Privacy: All processing stays on your server, and no data leaves your environment.

- Full Control: Customize detection to fit your specific use case.

Popular Libraries:

- NSFW.js (JavaScript):

Lightweight and runs in-browser. Good for simple use cases, but not highly accurate.

- OpenNSFW (Caffe):

Easy to implement, but outdated and not actively maintained.

- NudeNet (Python):

Highly accurate (~92–95%), supports object detection and batch processing. Requires Python and more server resources.

- TensorFlow Custom Models:

Offers the highest level of accuracy and customization if you have the time, expertise, and training data.

Things to Consider:

- Requires setup and may require additional CPU resources.

- Some models (like NudeNet) demand a Python environment and can be resource-intensive.

- May not match third-party services in accuracy without proper tuning.

In conclusion, integrating Image Moderation AI is a crucial step toward building a safer and more trustworthy online platform. Whether you’re managing a dating site, social media app, or e-commerce marketplace, the right moderation solution can help you detect and filter harmful content at scale. However, the implementation can be technically demanding, especially when balancing performance, privacy, and user experience. That’s why it’s important to evaluate your goals, budget, and in-house capabilities before choosing your approach.

Our recommendation:

Consider third-party services like Sightengine if you’re seeking fast and accurate moderation without the need to manage infrastructure or train AI models. However, if privacy, cost control, and full customization are top priorities, and you have the technical resources, then built-in libraries like NudeNet may be the better choice. Either way, investing in the right AI moderation solution will ultimately enhance your platform’s safety, compliance, and credibility.